Jennifer Cook-Chrysos/Whitehead Institute

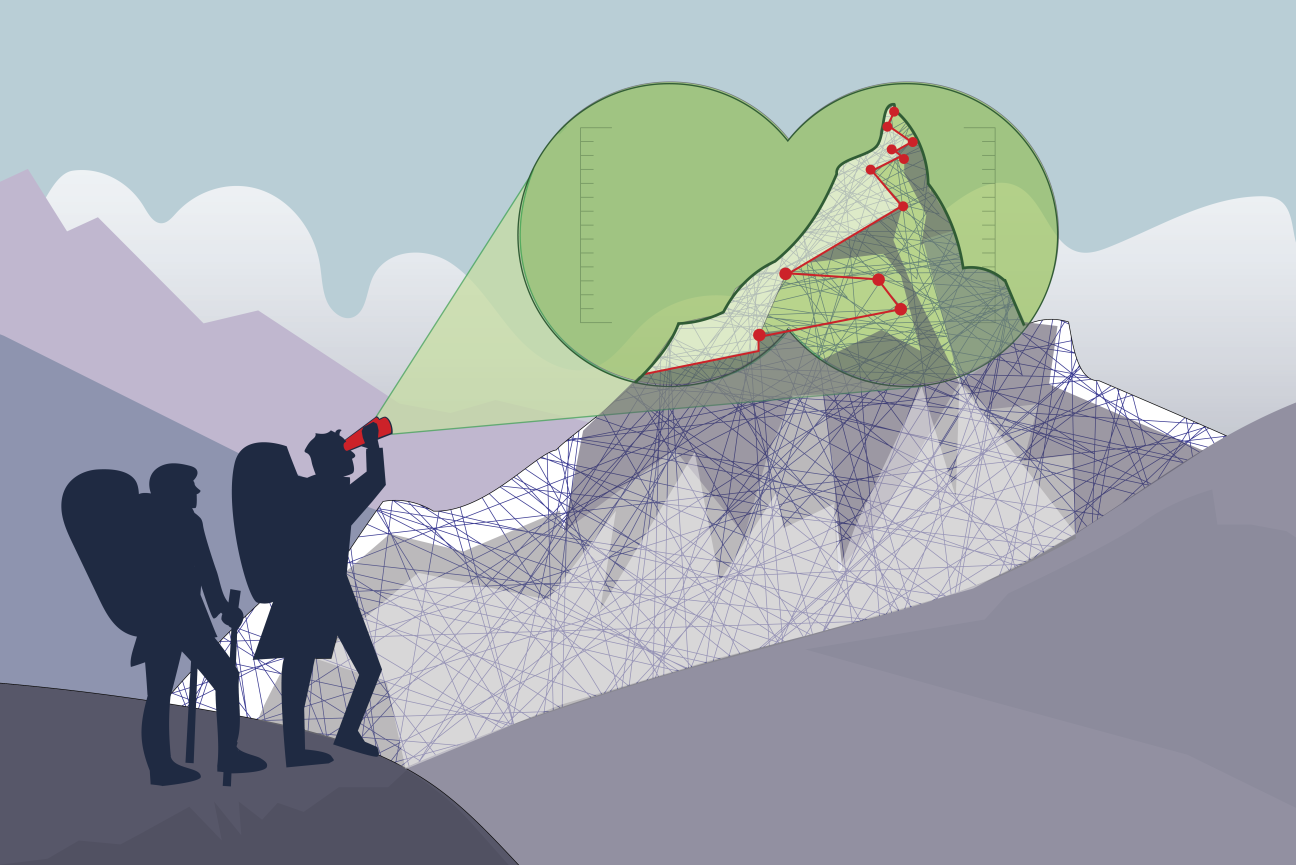

Scaling a mountain of data requires new computational tools

This story is part of our series, Tool and Method Development at Whitehead Institute.

With advances in experimental tools, researchers can collect massive amounts of data, on a scale beyond what previous generations could have imagined. But then a new challenge arises: how do they make sense of it? As the scale and complexity of available data have grown, computation has become a crucial aspect of biological research. Researchers often have to sift through or synthesize tens of thousands of data points. With the right computational tools, they can gain novel insights into previously inscrutable aspects of how our cells function, or how a disease originates.

When Whitehead Institute researchers have found themselves asking questions that require making sense of large or complex datasets, they have often needed to develop the computational tools themselves or in collaboration with computer scientists. These include a variety of powerful algorithms and machine learning tools created to answer ambitious questions that otherwise could not be addressed. The problems they have tackled range from how cancer cells evolve and spread to how RNA viruses shape-shift their genomes, with a number of questions in between.

Tracing the spread of cancer with molecular recorders

Cancers typically become most deadly when they metastasize, or spread throughout the body, invading new tissues to form more tumors. How and when cancer cells gain the ability to metastasize is a complicated question: the transition tends to happen invisibly, a cryptic event lost among many cancer cell divisions. Whitehead Institute Member Jonathan Weissman, also a professor of biology at the Massachusetts Institute of Technology (MIT), an HHMI investigator, and a member of the Koch Institute at MIT, wanted a way to track cancer as it spreads and record the moments of change towards metastasis.

The crux of the approach is a CRISPR-based tool developed by Michelle Chan, then a postdoctoral researcher in the lab, that barcodes cells with a “DNA scratchpad” to which the cells add new marks each time they divide, so that new generations of cells are marked differently than their predecessors. In this way, cells contain a record of their lineage in their DNA. Weissman’s lab first used this tool to track embryonic development. Then, led by lab members Jeffrey Quinn, a postdoctoral researcher, and Matthew Jones, a graduate student, in collaboration with Nir Yosef, a computer scientist at the University of California at Berkeley, and Trever Bivona, a cancer biologist at the University of California at San Francisco, they applied it to a line of lung cancer cells inserted into mice.

The cancer cells grew and spread, and then were sequenced to obtain the unique barcode of each cell. Weissman and Yosef developed an algorithm that can reconstruct the cells’ family tree based on these barcodes, a difficult computational challenge that requires determining how tens of thousands of cells relate to each other. The family trees reveal where and when different branches became metastatic. The algorithm can distinguish between highly metastatic lineages in which the metastatic cells are all descended from one or a few cells that made the jump to become metastatic versus those in which the metastatic cells are descended from many different branches that each became metastatic separately. Further computation allowed the researchers to determine the paths that the cells took through the body, or the order in which they invaded new tissues as they spread.
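To make the idea of rebuilding a family tree from barcodes concrete, here is a minimal sketch in Python. It is not the algorithm the researchers developed. It is a simple greedy heuristic that assumes each barcode is a tuple of site states, that `0` means an unedited site, and that edits are inherited and never reversed. The function name, the data layout, and the toy barcodes are all hypothetical.

```python
from collections import Counter


def build_tree(cells):
    """Greedy lineage sketch: split cells on the most widely shared edit.

    cells: dict mapping cell name -> tuple of site states (0 = unedited).
    Returns a nested tuple whose leaves are cell names.
    """
    names = sorted(cells)
    if len(names) == 1:
        return names[0]
    # Count how many cells in this group carry each (site, state) edit.
    counts = Counter(
        (site, state)
        for barcode in cells.values()
        for site, state in enumerate(barcode)
        if state != 0
    )
    # Edits carried by every cell in the group cannot divide it further.
    informative = {edit: n for edit, n in counts.items() if n < len(names)}
    if not informative:
        return tuple(names)  # unresolved: no edit separates these cells
    # Assumption: an edit shared by many cells arose early in the lineage.
    site, state = max(informative, key=lambda e: (informative[e], -e[0], -e[1]))
    carriers = {n: b for n, b in cells.items() if b[site] == state}
    others = {n: b for n, b in cells.items() if b[site] != state}
    return (build_tree(carriers), build_tree(others))


# Toy barcodes (made up): A, B and C share an early edit; A and B share a later one.
toy_cells = {
    "A": (1, 2, 0),
    "B": (1, 2, 3),
    "C": (1, 0, 0),
    "D": (0, 0, 4),
}
print(build_tree(toy_cells))  # ((('B', 'A'), 'C'), 'D')
```

In a tree like this, labeling each leaf with the tissue it was sampled from would show whether metastatic cells cluster on one branch or are scattered across many, which is the distinction described above. Real data add noise, missing sites, and tens of thousands of cells, which is what makes the actual problem hard.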

Collectively, these tools provide a detailed record of the cancer’s progression, and with this record Weissman’s lab was able to uncover a variety of insights into metastasis. They observed much more heterogeneity in outcomes than they expected, given that all of the cancer cells came from the same original line; some lineages spread fast and far while others never even left the lungs. The researchers found differences between the lineages that helped explain these different outcomes: the expression of certain genes was linked to increased aggressiveness and metastasis, while other genes played the opposite role. The researchers also discovered that a tissue between the lungs, the mediastinal lymph tissue, acted as a metastatic hub that aggressive cancer cells used as a gateway to other tissues.

These findings could provide new leads for cancer therapies as well as better prognostic tools. Weissman’s hope is to ultimately make cancer progression as predictable as a simple physics equation: if you know the inputs at the start, you can tell exactly what a cancer is going to do. Making cancer more predictable would also make it more manageable.

Weissman intends to adapt these tools to shed light on other enigmatic processes in biology. His lab is working on expanding their barcoding system so that it contains even more information. Cells would note everything that happens to them in their internal molecular recorders, which would serve the same role as a black box in a plane. These data, when fed into the right algorithms, could provide a whole new level of insight and predictability in biology.

Capturing viruses in the act of shape-shifting their genomes

RNA is a versatile molecule. Many of the deadliest viruses in the world, including influenza, rabies, Ebola, human immunodeficiency virus (HIV), and SARS-CoV-2—the virus responsible for the Covid-19 pandemic—have genomes made of RNA, rather than DNA. The tiny HIV genome has only nine genes, and yet it uses these to create fifteen proteins with which it can invade cells and reproduce. Former Whitehead Institute Fellow Silvi Rouskin, now a faculty member at Harvard Medical School, suspected that the virus was able to create so many different proteins by folding its RNA genome into different shapes, each of which left different sections of the genome available to be read and used to create proteins. If this were true, then researchers might be able to target the shapes to incapacitate RNA viruses, for example by locking them into one shape and preventing them from shifting to create a necessary protein.

Rouskin’s suspicion aligned with theory: because of RNA’s biochemical and biophysical properties, many RNA sequences have multiple solutions for how they can fold in two or three dimensions. The challenge would be to verify whether the theory was borne out in practice: could Rouskin show an RNA genome taking on different shapes, for example in HIV?

To answer that question, Rouskin first created a tool called DMS-MaPSeq that tags the open bases (the building blocks of the genome) on RNA, and then creates mutations at these points when the RNA is converted to DNA. Then Rouskin and collaborators at the Walter and Eliza Hall Institute of Medical Research and elsewhere created an algorithm, DREEM, that could infer an RNA molecule’s shape based on which bases were mutated, or marked as open. Unlike previous approaches that looked at many molecules and predicted the most likely shape as an overall average, the DREEM algorithm could recognize when different RNA molecules folded into different shapes. The algorithm could identify unpredicted shapes and provide a ratio of how commonly different shapes appeared in relation to each other.
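One standard way to separate a mixed population of reads into distinct mutation patterns is to fit a mixture model with expectation-maximization. The sketch below illustrates that general technique on toy data. It is an assumption for illustration, not a description of DREEM’s internals: the function name, parameters, clamping constants, and reads are all hypothetical. Each read is a tuple of 0s and 1s, where 1 marks a base that was mutated (open).

```python
import math
import random


def fit_mixture(reads, k=2, iterations=50, seed=0):
    """Fit a k-component Bernoulli mixture to binary reads with EM.

    Returns (weights, rates): the estimated share of each cluster and
    its per-position mutation rate.
    """
    rng = random.Random(seed)
    n_pos = len(reads[0])
    weights = [1.0 / k] * k
    # Random starting rates so the clusters are not identical at the outset.
    rates = [[rng.uniform(0.25, 0.75) for _ in range(n_pos)] for _ in range(k)]
    for _ in range(iterations):
        # E-step: probability that each read came from each cluster.
        resp = []
        for read in reads:
            logp = []
            for c in range(k):
                lp = math.log(weights[c])
                for x, r in zip(read, rates[c]):
                    lp += math.log(r if x else 1.0 - r)
                logp.append(lp)
            top = max(logp)
            probs = [math.exp(lp - top) for lp in logp]
            total = sum(probs)
            resp.append([p / total for p in probs])
        # M-step: re-estimate cluster shares and mutation rates.
        for c in range(k):
            mass = max(sum(r[c] for r in resp), 1e-9)
            weights[c] = max(mass / len(reads), 1e-6)
            for j in range(n_pos):
                hits = sum(r[c] * read[j] for r, read in zip(resp, reads))
                # Clamp away from 0 and 1 so the logarithms stay finite.
                rates[c][j] = min(max(hits / mass, 1e-3), 1.0 - 1e-3)
    return weights, rates


# Toy reads (made up): two mutation patterns mixed 60:40.
toy_reads = [(1, 1, 0, 0, 0, 0)] * 60 + [(0, 0, 0, 0, 1, 1)] * 40
weights, rates = fit_mixture(toy_reads, k=2)
print(sorted(round(w, 2) for w in weights))
```

The fitted weights play the role of the ratio between shapes, and each cluster’s per-position rates indicate which bases are open in that shape. On these toy reads the weights should come out close to the 60:40 mix used to build them. An averaging approach would instead report a single blended profile with every marked position partly open.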

When Rouskin, along with lab members Phillip J. Tomezsko, then a graduate student at Harvard University, and Paromita Gupta, then a senior technician, and outside collaborator Vincent D.A. Corbin, a postdoctoral researcher at the Walter and Eliza Hall Institute of Medical Research, used these tools on HIV, they found that the virus does indeed fold its genome into multiple shapes in many places, and that this folding determines which sections of RNA are available to be used to make protein.

Scanning electron micrograph of HIV-1 (in green) budding from cultured lymphocyte.

CDC Public Health Image Library

Then, before Rouskin had even published her paper on HIV, a new virus that appeared to be a perfect candidate for her tools started making headlines: SARS-CoV-2. Rouskin’s lab quickly went to work, and was the first to publish the complete genome structure of the new virus. Once again, Rouskin found that her subject could fold its genome into multiple shapes in various locations. Rouskin identified one of these locations as a promising drug target. She and collaborators are now working on developing drugs that could treat Covid-19, for which there are thus far very limited treatment options, based on the findings from her work with the DREEM algorithm.

Making precision predictions for microRNA

Cells must carefully calibrate the amount of protein that they make from different genes at different times in order to maintain their identities and perform the many tasks that keep the body running. One way that cells control protein levels over time is by modulating the speed at which they break down messenger RNAs, the molecules that are copied from DNA to act as templates for proteins. The faster that a messenger RNA is broken down, the less protein can be produced from its template.

One of the main tools that cells can use to degrade messenger RNA is microRNAs, tiny RNA sequences that pair to matching sequences on certain messenger RNAs and initiate their destruction. Knowing which messenger RNAs a microRNA will target, or which microRNAs can degrade a given messenger RNA, is important for understanding cell biology and can help researchers develop drugs that work by regulating RNA. However, there are far too many potential pairings between microRNAs and targeting sites on messenger RNAs for researchers to be able to measure all of them.

Instead, Whitehead Institute Member David Bartel, also a professor of biology at MIT and an HHMI investigator, has developed a tool that predicts the targets of microRNAs and the success with which each microRNA will regulate each target, based on research from his lab that revealed patterns in how microRNAs target messenger RNAs. The tool, called TargetScan, is co-run by the Whitehead Institute Bioinformatics and Research Computing group and has been made available online for any researcher. TargetScan has become a go-to resource, cited in over 19,000 papers and visited by tens of thousands of users each month.
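As a rough illustration of what “pairing to matching sequences” means computationally, the sketch below scans a messenger RNA for stretches complementary to part of a microRNA. It assumes the common convention that nucleotides 2 to 8 of the microRNA (its “seed”) drive target recognition. It covers only this first step and does not represent how TargetScan scores or ranks sites; the function name and both sequences are illustrative.

```python
# Watson-Crick pairing for RNA bases.
COMPLEMENT = {"A": "U", "U": "A", "G": "C", "C": "G"}


def seed_match_sites(mirna, mrna, seed_start=1, seed_end=8):
    """Return 0-based mRNA positions that pair with the microRNA seed.

    The seed is mirna[seed_start:seed_end], which is nucleotides 2 to 8
    in 1-based numbering by default (an assumed convention).
    """
    seed = mirna[seed_start:seed_end]
    # Pairing is antiparallel, so the site is the reverse complement.
    site = "".join(COMPLEMENT[base] for base in reversed(seed))
    width = len(site)
    return [
        i for i in range(len(mrna) - width + 1)
        if mrna[i:i + width] == site
    ]


# Example sequences for illustration only.
mirna = "UGAGGUAGUAGGUUGUAUAGUU"
mrna = "AAGCUACCUCAUUGGCUACCUCAA"
print(seed_match_sites(mirna, mrna))  # [3, 15]
```

A scan like this only lists candidate sites. Predicting how strongly each site is actually regulated is the harder problem that the measurements and models described in this section address.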

For early iterations of TargetScan, the researchers looked at the average behavior of many microRNAs to predict how well they would regulate different messenger RNAs, because there was not a good way to get measurements, or build predictions, at an individual level. More recently, however, researchers in Bartel’s lab took TargetScan to a new level of precision. Led by former graduate students Sean McGeary and Kathy Lin, the team developed an experimental and computational approach to get individualized predictions for each microRNA. First, they collected a massive amount of data on how well each of six microRNAs regulated a large set of messenger RNAs. The results upended several assumptions about microRNA behavior, such as an expected hierarchy in how well different microRNAs would bind to different targets based on the match between their sequences.

The researchers then used the high-resolution data they had gathered on the six microRNAs to train a convolutional neural network to predict the targeting efficacy of any microRNA for any messenger RNA target site. This computational model is being incorporated into TargetScan, and the precision it adds to the resource’s predictions will benefit many researchers who are studying microRNA targeting of messenger RNA in the context of disease and other areas.

Uncovering new aspects of complex diseases

Some diseases, such as cystic fibrosis or Tay-Sachs, are caused by a single gene, and in these cases, figuring out the link between the gene and disease progression can be relatively straightforward. Conversely, complex diseases are caused by a combination of multiple genes and environmental factors, and in cases in which many genes are implicated, such as diabetes, numerous cancers, and a variety of immune and neuropsychiatric disorders, teasing out the role of each gene in the disease can be a challenge.

For many complex diseases, data already exist that reveal which genetic alterations play a role. These genetic alterations, or genetic variants, are single letter changes in one’s DNA code. Some of these genetic changes are inside genes and impact proteins, but most are found in the DNA sequences between genes, which makes it difficult to determine what role these genetic variants play in the course of the disease. Whitehead Institute Member Olivia Corradin, also an assistant professor of biology at MIT, wanted to analyze these data using new methods in order to tackle the question of how the variants contribute to disease.

Corradin was interested in diseases that affect multiple tissues over their course. She decided to start by looking at multiple sclerosis (MS), a degenerative disease in which the immune system attacks the protective sheaths on neurons, and which can lead to debilitating symptoms over time. The genetic variants that are most strongly associated with MS risk act in the immune system, but Corradin wondered if some of the other associated variants might be playing roles elsewhere. After all, MS is both an autoimmune and a neurodegenerative disease.

Oligodendrocyte image.

Olivia Corradin/Whitehead Institute

Corradin developed the outside variant approach, a model that can determine which cell types different disease-associated genetic variants act in, potentially revealing unrecognized disease pathways. The approach works by looking at how disease-associated variants interact with other variants that are physically close to them in the three-dimensional space of the nucleus. Variants that are not adjacent to known disease-associated variants in the linear sequence of the chromosome can end up in close proximity to them in the folds and loops of the genome, and in these cases can work together to modify the expression of the same target gene. In the outside variant approach, a computational model runs through huge numbers of permutations to identify instances in which variants in close 3D proximity, acting on the same target, collectively confer higher disease risk than the known disease-associated variant confers alone. The researchers then determine which cell type the linked variants collectively act in, and follow up on this lead to see whether that cell type plays a role in the disease.
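The role that permutations can play in a question like this can be shown with a generic permutation test. The sketch below is not the outside variant model itself, and its data layout is a loud assumption: it takes a group of individuals who all carry the known disease-associated variant, records whether each also carries a nearby “outside” variant and whether each has the disease, and asks how often shuffled labels produce a risk difference at least as large as the observed one. All names and data are hypothetical.

```python
import random


def risk(cases):
    """Fraction of individuals in a group who have the disease."""
    return sum(cases) / len(cases) if cases else 0.0


def permutation_p_value(is_case, has_outside, n_permutations=2000, seed=0):
    """Test whether carrying the outside variant adds to disease risk.

    is_case, has_outside: parallel lists of booleans, one entry per
    individual; everyone is assumed to carry the known variant.
    Returns (observed excess risk, one-sided permutation p-value).
    """
    def excess(labels):
        with_variant = [c for c, h in zip(is_case, labels) if h]
        without = [c for c, h in zip(is_case, labels) if not h]
        return risk(with_variant) - risk(without)

    observed = excess(has_outside)
    rng = random.Random(seed)
    shuffled = list(has_outside)
    hits = 0
    for _ in range(n_permutations):
        # Shuffling breaks any real link between variant and disease.
        rng.shuffle(shuffled)
        if excess(shuffled) >= observed:
            hits += 1
    # Add-one correction keeps the estimate away from exactly zero.
    return observed, (hits + 1) / (n_permutations + 1)


# Toy cohort (made up): disease tracks the outside variant perfectly.
is_case = [True] * 20 + [False] * 20
has_outside = [True] * 20 + [False] * 20
observed, p_value = permutation_p_value(is_case, has_outside)
print(observed, p_value)
```

A small p-value says the combined effect is unlikely to be a chance arrangement of labels. Repeating such a comparison across many variant pairs and cell types is one reason this kind of analysis requires very large numbers of permutations.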

When Corradin, along with lab technician Anna Barbeau and collaborator Daniel Factor, a senior scientist at Convelo Therapeutics, applied the outside variant approach to MS, their findings confirmed that most of the MS-associated variants act in the immune system. However, they also revealed that some of the variants act in the central nervous system: these variants negatively impact oligodendrocytes, the cells that build and repair the protective sheaths around neurons that are destroyed in MS. Corradin hopes that this insight might provide a new avenue for MS therapies that improve the growth and repair of neuronal sheaths.

Corradin has also used the approach to look at breast cancer, and is now using it to explore a number of immune and neuropsychiatric disorders, as well as to better understand the frequent overlap in incidence of these two types of disorders.

Finding patterns in a sea of data

In each of the cases described here, Whitehead Institute researchers and their collaborators had to build the computational tools that made the data they had gathered interpretable. Each algorithm had to be carefully planned, then adjusted through many rounds of trial and error, its biases examined and eliminated, in much the same way that an experiment is designed in the lab, in order for the research to proceed. Then, when the algorithms were deployed, they helped the researchers to discover valuable new insights into subjects ranging from cancer and viruses to complex diseases and basic cellular function. As the tools for gathering data continue to evolve and the questions that researchers want to ask grow in scale and complexity, Whitehead Institute researchers are ready to design new algorithms that will sift through the seas of data to find once-hidden patterns and reveal the inner workings of biology.
